We have a web-based analytics platform running under IIS/ASP.NET MVC/Web API, hosted on EC2 or on physical boxes, into which we wanted to integrate R as an exploratory data tool for data analysts and research statisticians. We've developed a notebook-style front-end that sends chunks of R code to the back-end and displays the console output, as well as any plots produced during the request. We currently output plots as SVG via the device PInvoke layer, but we'll be adding a PNG device to handle SVGs larger than 50 MB (one plot produces more than 2 GB of SVG data). Because we are multi-user, we required per-session (or, depending on the case, per-user) R instances. We also wanted the ability to stop and restart an instance, even if that meant losing the per-session data.
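The post doesn't say how the SVG/PNG switch will be decided. The sketch below assumes the SVG device's output can be consumed as a stream of chunks, and that `svg_chunks` and `render_png` are hypothetical callbacks. It stops reading SVG output once it passes the ~50 MB limit and re-renders the plot as PNG instead:

```python
from typing import Callable, Iterable, Tuple

# Threshold from the post (~50 MB); the exact cut-off is an assumption.
SVG_LIMIT_BYTES = 50 * 1024 * 1024


def render_plot(svg_chunks: Callable[[], Iterable[bytes]],
                render_png: Callable[[], bytes],
                limit: int = SVG_LIMIT_BYTES) -> Tuple[str, bytes]:
    """Return (mime_type, data): SVG if it fits under `limit`, else PNG.

    `svg_chunks` and `render_png` are hypothetical hooks into the graphics
    devices; they are not part of R or R.NET.
    """
    buf = bytearray()
    for chunk in svg_chunks():
        buf.extend(chunk)
        if len(buf) > limit:
            # Stop consuming SVG output early and rasterize instead.
            return "image/png", render_png()
    return "image/svg+xml", bytes(buf)
```

Aborting as soon as the limit is crossed avoids buffering a multi-gigabyte SVG just to discover that it is too large to send to the browser.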

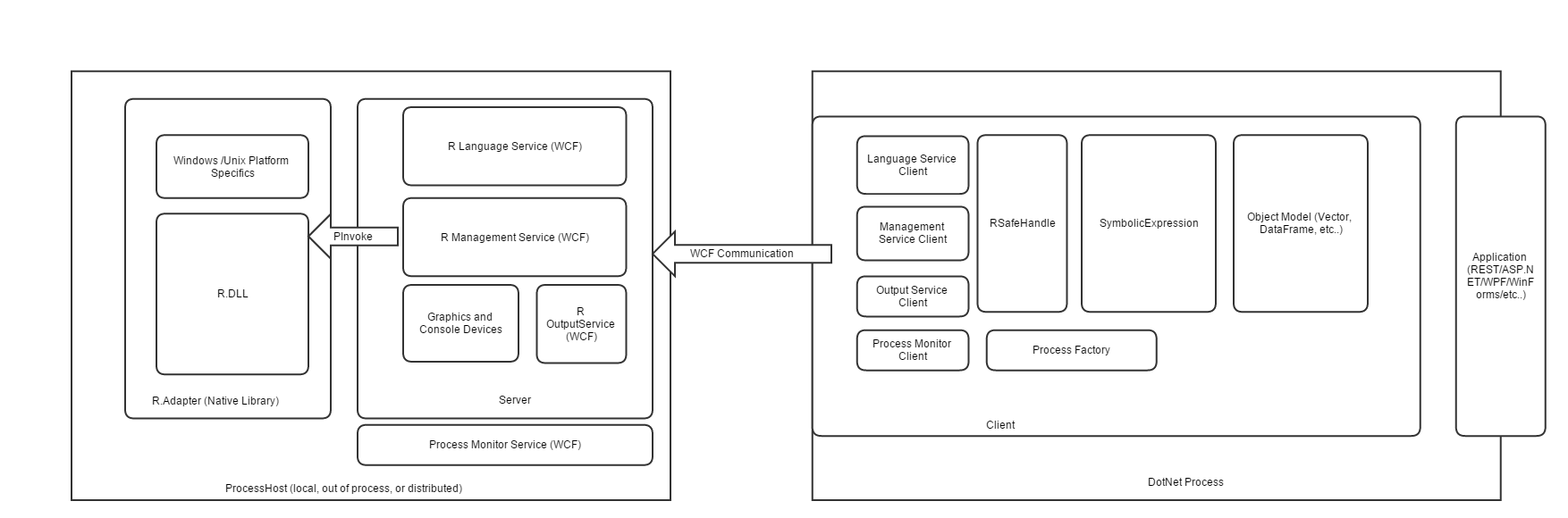

At the time, I knew nothing about the mechanics of embedding R or about the structure of R.NET. With that in mind, I chose WCF as our IPC mechanism and a lightweight .exe as the host for the R process and the WCF services. One problem with using WCF for IPC is that its hosting options weren't geared toward services that are created and shut down dynamically. Using a lightweight .exe solved that, but it also required implementing a broker to create and destroy the host processes.
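Here is a minimal broker sketch for the lifecycle described above. It keeps one host process per key, where the key is either the session ID or the user ID, and can create, destroy, or restart that process. The host command `RHost.exe --session <key>` is hypothetical. The launcher can be injected, so the broker can be tested without spawning anything:

```python
import subprocess
from typing import Any, Callable, Dict, List

# Hypothetical host executable and arguments; not taken from the post.
HOST_CMD = ["RHost.exe", "--session"]


def launch_host(key: str) -> subprocess.Popen:
    """Start one host process (embedded R + WCF endpoint) for a session or user."""
    return subprocess.Popen(HOST_CMD + [key])


class RHostBroker:
    """Creates, destroys and restarts one R host process per session/user key."""

    def __init__(self, launcher: Callable[[str], Any] = launch_host):
        self._launcher = launcher
        self._hosts: Dict[str, Any] = {}

    def get_or_create(self, key: str) -> Any:
        if key not in self._hosts:
            self._hosts[key] = self._launcher(key)
        return self._hosts[key]

    def destroy(self, key: str, timeout: float = 5.0) -> None:
        proc = self._hosts.pop(key, None)
        if proc is None:
            return
        proc.terminate()
        try:
            proc.wait(timeout=timeout)
        except subprocess.TimeoutExpired:
            proc.kill()  # host ignored the polite shutdown

    def restart(self, key: str) -> Any:
        # Any in-memory R state for this key is lost, which the post accepts.
        self.destroy(key)
        return self.get_or_create(key)

    def active_keys(self) -> List[str]:
        return sorted(self._hosts)
```

Because the broker owns process lifetime, the WCF service inside each host never has to support being torn down and recreated in place. It simply dies with its process.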

![Architecture Diagram]()

Initially, I thought the R API was exposed via a flat PInvoke layer with the object model built on top of it, so I planned to shim a WCF wrapper around the R API and leave the object model mostly alone. That turned out not to be the case, so I pulled all the R calls down into a flat layer and had the object model call into that flattened view. For granularity, I broke the API into three loose chunks: one for SEXPs and R objects, one for managing the R runtime, and one for console and graphics output.
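Here is one way to picture the three-way split. Each chunk is a flat interface that deals only in opaque integer handles and plain values, and the object-model classes hold a handle and delegate every call to those interfaces. All method names below are hypothetical illustrations, not R.NET's actual API:

```python
from typing import List, Protocol, Sequence


class SexpApi(Protocol):
    """SEXPs and R objects; handles are opaque ints owned by the R host."""
    def alloc_real_vector(self, values: Sequence[float]) -> int: ...
    def get_real_vector(self, handle: int) -> List[float]: ...
    def release(self, handle: int) -> None: ...


class RuntimeApi(Protocol):
    """Managing the R runtime itself."""
    def evaluate(self, code: str) -> int: ...
    def shutdown(self) -> None: ...


class OutputApi(Protocol):
    """Console and graphics output produced during a request."""
    def read_console(self) -> str: ...
    def read_plots(self) -> List[bytes]: ...


class NumericVector:
    """Object-model class: holds only a handle and calls the flat layer."""

    def __init__(self, api: SexpApi, handle: int):
        self._api = api
        self.handle = handle

    @classmethod
    def create(cls, api: SexpApi, values: Sequence[float]) -> "NumericVector":
        return cls(api, api.alloc_real_vector(values))

    def to_list(self) -> List[float]:
        return self._api.get_real_vector(self.handle)
```

Since the object model only sees these interfaces, the same classes can sit on top of an in-process implementation or a WCF client proxy. Each call then crosses the process boundary with plain data only.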

I also ran into issues with platform-specific pointer and memory arithmetic, mostly inside the vector objects. I didn't want to make assumptions about the sizes of things in the out-of-process R instances, so I moved all of that logic out of the object model as well. Some platform-specific management routines, such as setting the memory limit, were also repackaged into a Windows or Unix layer that isn't visible from the client side.
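The following sketch shows why this arithmetic belongs on the server. A byte offset into a vector depends on the host's pointer size, so a 32-bit and a 64-bit R host disagree about the same element. The header size, expressed here as a number of pointer-sized words, is a hypothetical parameter and not R's real vector layout:

```python
import struct

# Sizes as seen by *this* process. When this runs inside the R host, they
# describe the R build, which the client may not match.
POINTER_SIZE = struct.calcsize("P")
ELEMENT_SIZES = {"real": struct.calcsize("d")}  # C double


def element_offset(kind: str, index: int, header_words: int,
                   pointer_size: int = POINTER_SIZE) -> int:
    """Byte offset of element `index` from the start of a vector object.

    `header_words` is a hypothetical header length in pointer-sized words.
    """
    if index < 0:
        raise IndexError(index)
    return header_words * pointer_size + index * ELEMENT_SIZES[kind]
```

With a three-word header, element 2 of a double vector sits at offset 40 on a 64-bit host but at 28 on a 32-bit host. Keeping the computation server-side means the client never has to know which build it is talking to.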

I didn't like the object model inheriting from SafeHandle, so all handles are aggregated into the parent object, which is usually a "Symbolic Expression". In general, I want the objects that end up in the finalization queue to be as small as possible.
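This Python analogy of the aggregated-handle approach uses `weakref.finalize` as a stand-in for the .NET finalization queue. The expression object stores the raw handle itself. The only thing registered for cleanup is a tiny callback holding the release function and the integer handle, not the whole object. The `release` function is a hypothetical hook into the R host:

```python
import weakref
from typing import Callable


class SymbolicExpression:
    """Owns a raw R handle directly rather than via a SafeHandle-like wrapper."""

    def __init__(self, handle: int, release: Callable[[int], None]):
        self.handle = handle
        # The finalizer captures only `release` and the int handle, never
        # `self`, so the registered cleanup state stays small and the
        # expression itself can still be collected.
        self._finalizer = weakref.finalize(self, release, handle)

    def dispose(self) -> None:
        """Release deterministically; the callback runs at most once."""
        self._finalizer()

    @property
    def disposed(self) -> bool:
        return not self._finalizer.alive
```

Explicit `dispose()` covers the normal path. The finalizer is only a safety net for expressions that are dropped without being disposed.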

I changed the way the graphics system is initialized, and devices are now part and parcel of the server. In practice, we only need a couple of devices; if someone wants to add a special one, it can be added and integrated via a PR.
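The following sketches what "devices are part of the server" can look like. There is a fixed registry compiled into the host, and clients only select a device by name. The `DeviceSpec` fields and device names are assumptions for illustration:

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class DeviceSpec:
    mime_type: str
    vector: bool  # True for vector formats such as SVG


# The fixed set of devices built into the server. Supporting a new device
# means adding an entry here (i.e. a PR), not loading a client-side plug-in.
DEVICES: Dict[str, DeviceSpec] = {
    "svg": DeviceSpec("image/svg+xml", vector=True),
    "png": DeviceSpec("image/png", vector=False),
}


def resolve_device(name: str) -> DeviceSpec:
    """Look up a server-side device by name, rejecting unknown ones."""
    try:
        return DEVICES[name]
    except KeyError:
        raise ValueError(
            f"unsupported graphics device {name!r}; available: {sorted(DEVICES)}"
        ) from None
```

Keeping the list closed means device initialization can happen once inside the host, and the client API never exposes device internals.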

Others have talked about wrapping R.NET in WCF from the outside, and I think that's OK. But if you're using the object model, you'll run into similar issues, and you'll have to marshal memory from .NET to WCF to .NET to R instead of from .NET to WCF to R. If you just want to execute strings and get output, it doesn't matter much, but if you're integrating C# and R, I think it does.

The C# object model and cross-platform code are low priorities for us, so those parts are fairly messed up.

I'm a fairly strict TDD advocate, so I had some issues working on this. I'm cleaning up the state of the tests and pulling over the R.NET tests that I think are valuable. I was planning to open up my changes on GitHub once I had completed those tasks this coming week.